One of the initial fears regarding generative artificial intelligence was that programmers would lose their jobs. While some still hold this view (Zinkula & Mok 2024), others (Lightner 2023; Strzelecki 2023) have taken the opposite stance: the availability of new tools will increase the need for coders, as has happened with every new tool since software engineering was established as an independent field. In fact, there is an ongoing global shortage of developers (Trienpont International 2023).

Pathfinder (2024) is a project at LAB in collaboration with partners around Europe. One of the goals of the project is to enhance students' comprehension of AI technology. In this respect, IT students are an interesting case: unlike many other answers these tools can provide, AI-generated code can easily be tested.

The student perspective

At LAB, second-year IT and BIT students are encouraged to experiment with AI in various ways, since using it will likely be a part of their future jobs. A typical instruction on these courses is that the use of AI is allowed but should be reported for the purposes of mutual learning. This follows Arene's (2023) recommendations, but the course instructions add a warning: AI tools might seem good, but using them also requires a lot of understanding from the programmer.

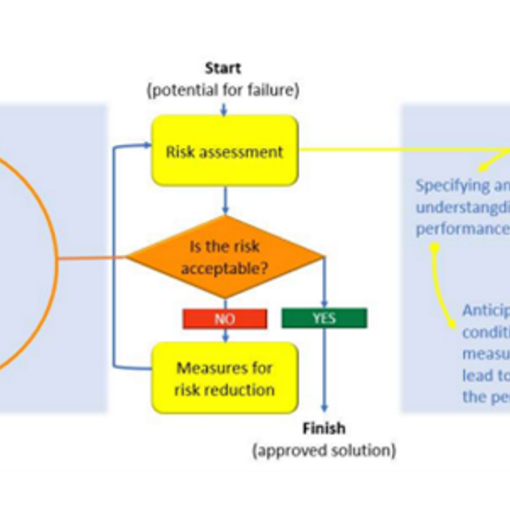

![Screenshot of obscure data.](https://blogit.lab.fi/labfocus/wp-content/uploads/sites/8/2024/09/739_2024_The-adoption-of-AI-coding-tools-among-IT-students-at-LAB-1024x477.jpg)

The first problem for students is that you can't start from zero with generative AI. In general, if you know nothing about a given topic, you can't evaluate the output critically, but if you are an actual expert, the output is usually not useful to you. These tools are most beneficial to those who are somewhere in between.

You need to understand the code at least well enough to give the system meaningful prompts. Trying to apply generated code without familiarity with its context generally doesn't work, and even if you manage to produce a working application that way, modifying that application is a daunting task.

However, if you already have code, ChatGPT and similar tools are particularly good at explaining what it does. For example, ChatGPT can go through the code line by line, explaining what each part means, and it can answer follow-up questions. These tools can be helpful even if you never produce actual code with them.

Another effective way to use AI is to find syntax errors. Many compilers and interpreters try to identify these, but their error messages can be meaningless if you don't have experience with the language. AI tools can usually fix these kinds of problems easily, even if they don't always correctly identify what they have fixed.

Finally, various code auto-completion tools can be helpful. Many students find them disruptive, but others rely on them extensively, which is in line with professional attitudes in the field in general (Stack Overflow 2024).

The field is still learning how best to use these tools, and what that means for students can be complicated. They have the opportunity to be at the forefront of this new development, but when no one really knows even the immediate future of these tools, learning them with little prior experience in the field can be difficult.

Openness is needed

In many cases even professionals in the field find the various AI tools untrustworthy (Stack Overflow 2024), so how is someone new to the field supposed to adopt them? At the same time, more people use the tools than find them trustworthy (Stack Overflow 2024), which indicates that even people who see the limitations of the tools still find them useful in their work.

As with learning in general, students need to be open to trying these technologies. Even if there are no ready-made answers to give them, confidence in using AI will be helpful, even if that confidence includes being critical of the tools.

Author

Aki Vainio works as a senior lecturer of IT at LAB and takes part in various RDI projects in expert roles. According to ChatGPT he has contributed significantly to online discussions and writings on topics like video games, information technology, and movies, but you shouldn’t necessarily believe that.

References

Arene. 2023. Arene’s recommendations on the use of artificial intelligence for universities of applied sciences. Arene. Cited 22 Mar 2024. Available at https://arene.fi/wp-content/uploads/PDF/2023/AI-Arene-suositukset_EN.pdf?_t=1686641192

Lightner, F. 2023. Will AI take your programming job. App Developer Magazine. Cited 15 Aug 2024. Available at https://appdevelopermagazine.com/Will-ai-take-your-programming-job/

Pathfinder. 2024. Welcome to the Erasmus+ Pathfinder Project. Netlify. Cited 15 Aug 2024. Available at https://erasmus-pathfinder.netlify.app/

Stack Overflow. 2024. 2024 Developer Survey. Stack Overflow. Cited 9 Sep 2024. Available at https://survey.stackoverflow.co/2024/ai

Strzelecki, R. 2023. Does Artificial Intelligence Threaten Programmers? Forbes. Cited 15 Aug 2024. Available at https://www.forbes.com/sites/forbestechcouncil/2023/08/23/does-artificial-intelligence-threaten-programmers/

Trienpont International. 2023. How Severe Is The Global Shortage of Software Developers? Medium. Cited 9 Sep 2024. Available at https://medium.com/@trienpont/how-severe-is-the-global-shortage-of-software-developers-9b99da78ca13

Zinkula, J. & Mok, A. 2024. ChatGPT may be coming for our jobs. Here are the 10 roles that AI is most likely to replace. Business Insider. Cited 22 Mar 2024. Available at https://www.businessinsider.com/chatgpt-jobs-at-risk-replacement-artificial-intelligence-ai-labor-trends-2023-02