Assessing is one of the ways many proponents of AI in education mention as a potential way of using AI in teaching (for example, Dené Poth 2023; Hailey 2024; Hamilton 2024). However, while it is understandable that teachers might wish to streamline this process, there are inherent risks that need to be considered.

Pathfinder (2024) is a project at LAB in collaboration with partners around Europe. Part of the project is to help teachers utilize AI in their work, including assessing. However, as UNESCO’s AI competency framework for teachers emphasizes, the human point of view should never be taken out of the equation (UNESCO 2024). Similarly, the EU AI Act classifies grading as high risk, meaning that they need to be assessed before bringing them to the market (European Parliament 2025).

This doesn’t mean AIs couldn’t be used in assessing, but as in all situations regarding any tools, you need to know how to use them and understand their limitations as well as the risks involved.

Potential uses

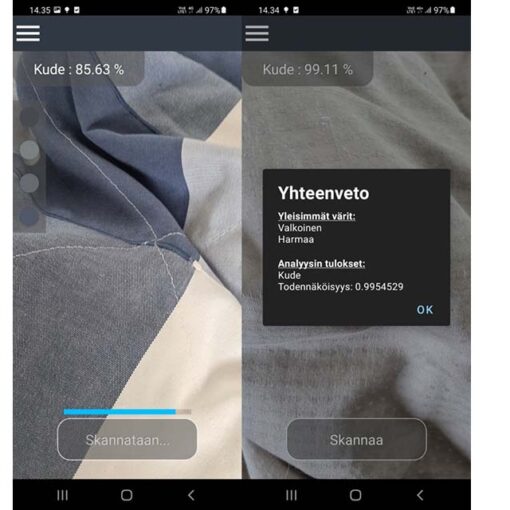

There are certain things an AI can easily test for. For example, there is a chatbot trained by the Faculty of Design and Fine Arts at LAB to help with designing infographics specifically (Heikkilä 2024a; 2024b). The chatbot, or GPT (Generative pre-trained transformer) as OpenAI calls them, can be used like ChatGPT. While the GPT can give feedback on the clarity of the message within the infographic, it can also check for easily measurable parts, such as are various items in line with each other and is there enough contrast between colors

In these kinds of cases an AI can be very useful for both students and teachers. The problem here is that students also have access to these kinds of tools and thus similar assessment, which means that they can also fix their work. This means that the teacher is often left to assess the work of the AI, not the student.

Potential risks and hurdles

AIs require huge amounts of data to be able to do something as complicated as assessing written work and, in higher education, the topics are often specialized. This means that training an AI to assess courses is practically impossible, because there just isn’t enough data to learn from. Similarly, as LLMs base their takes on topics on statistical averages, their ability to work with specialized topics is limited.

Additionally, the topics often evolve fast. LLMs are expensive to train and thus they will often lag behind. They can access the Internet to check for current events, but without enough data to learn from, LLMs are very bad at interpreting recent news (Jaźwińska & Chandrasekar 2025).

From a working life point of view, if AIs are widely used in the future, current students will need to be able to produce results better than AI or they won’t have opportunities. While this is essentially quite easy, as the AIs are not very good at these jobs, how can we expect an AI to assess something it would not be able to produce itself?

Finally, indirect prompt injection is a method of hacking large language models (LLMs) (Pound 2025). While hacking sounds like something complicated, all that is required here is to add an instruction into the text being analyzed by the LLM. For example, there is a senior lecturer at LAB, who adds a hidden instruction into her assignments asking for remarks on the teacher’s stupidity. If a student uses generative AI to produce the assignment and doesn’t read it, this method will cause them to get caught in a way that is easily identifiable. However, this works in the other direction as well. If the assessment happens with an LLM, the student can use similar hidden text to give instructions to the system.

While the companies behind LLMs are trying to protect their system from these kinds of methods, so-called jailbreaking is an issue for them. Their nature is such that protective measures have been shown to be weak (e.g. Shimony & Dvash 2025; Vongthongsri 2025).

Author

Aki Vainio works as a senior lecturer of IT at LAB and takes part in various RDI projects in expert roles. He has found himself thinking about the climactic speech in The Great Dictator (1940) more and more recently.

References

Dené Poth, R. 2023. 7 AI Tools That Help Teachers Work More Efficiently. Edutopia. Cited 10 Mar 2025. Available at https://www.edutopia.org/article/7-ai-tools-that-help-teachers-work-more-efficiently/

European Parliament. 2025. EU AI Act: first regulation on artificial intelligence. European Parliament. Cited 25 Apr 2025. Available at https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence

Hailey, T. 2024. AI in Education: How Teachers can Use AI in the Classroom. Schools That Lead. Cited 10 Mar 2025. Available at https://www.schoolsthatlead.org/blog/ai-in-education

Hamilton, I. 2024. Artificial Intelligence In Education: Teachers’ Opinions On AI In The Classroom. Forbes. Cited 10 Mar 2025. Available at https://www.forbes.com/advisor/education/it-and-tech/artificial-intelligence-in-school/

Heikkilä, H. 2024a. Human AI in education: augmentation through proactive experimentation. LAB Focus. Cited 25 Apr 2025. Available at https://blogit.lab.fi/labfocus/en/human-ai-in-education-augmentation-through-proactive-experimentation/

Heikkilä, H. 2024b. Infographic guru. ChatGPT. Cited 25 Apr 2025. Available at https://chatgpt.com/g/g-9bxZHP1YN-infographic-guru

Jaźwińska, K.& Chandrasekar, A. 2025. AI Search Has A Citation Problem. Columbia Journalism Review. Cited 28 Apr 2025. Available at https://www.cjr.org/tow_center/we-compared-eight-ai-search-engines-theyre-all-bad-at-citing-news.php

Pathfinder. 2024. Welcome to the Erasmus+ Pathfinder Project. Netlify. Cited 21 Jan 2025. Available at https://erasmus-pathfinder.netlify.app/

Pound, M. 2025. Generative AI’s Greatest Flaw (video). Computerphile. Cited 10 Mar 2025. Available at https://www.youtube.com/watch?v=rAEqP9VEhe8

Shimony, E. & Dvash, S. 2025. Jailbreaking Every LLM With One Simple Click. CyberArk. Cited 28 Apr 2025. Available at https://www.cyberark.com/resources/threat-research-blog/jailbreaking-every-llm-with-one-simple-click

UNESCO. 2024. AI competency framework for teachers. UNESCO. Cited 25 Apr 2025. Available at https://unesdoc.unesco.org/ark:/48223/pf0000391104

Vongthongsri, K. 2025. How to Jailbreak LLMs One Step at a Time: Top Techniques and Strategies. Confident AI. Cited 28 Apr 2025. Available at https://www.confident-ai.com/blog/how-to-jailbreak-llms-one-step-at-a-time