Researching and applying existing Artificial Intelligence and Computer Vision technologies plays a significant role in developing smart third-party solutions for the CitiCAP project at LAB University of Applied Sciences. As part of the RDI process, the CitiCAP team regularly evaluates and tests new hardware and software components that can help the project achieve its goals.

This time, together with the Tekoälypaja project, our team decided to try out the latest generation of Microsoft's motion- and depth-sensing cameras, the Azure Kinect.

For the CitiCAP project, the Azure Kinect device is part of a larger study exploring the capabilities of different smart devices in Human Detection and Body Tracking tasks. In the future, this and similar technologies could be used, for example, to monitor pedestrian traffic and detect possible accidents on the smart bicycle route from the Lahti Travel Centre to Ajokatu (City of Lahti 2020).

What is Azure Kinect and what can it be used for?

The Azure Kinect is the third generation of Microsoft Kinect devices. Unlike its predecessors, the Azure Kinect is an enterprise-oriented technology and a fully functional development kit for software applications. Because it is tightly integrated with the Microsoft Azure cloud platform and its Cognitive Services, the Azure Kinect provides many useful tools for AI, Computer Vision and Speech Recognition tasks. (Microsoft Azure 2020a.)

The device combines a 12-megapixel RGB camera, a 1-megapixel depth sensor and a spatial microphone array in a single package (Microsoft Azure 2020b). Together these sensors make it easy to capture and analyse objects in 3D space, and because the depth sensor provides its own infrared illumination, this works even in complete darkness.

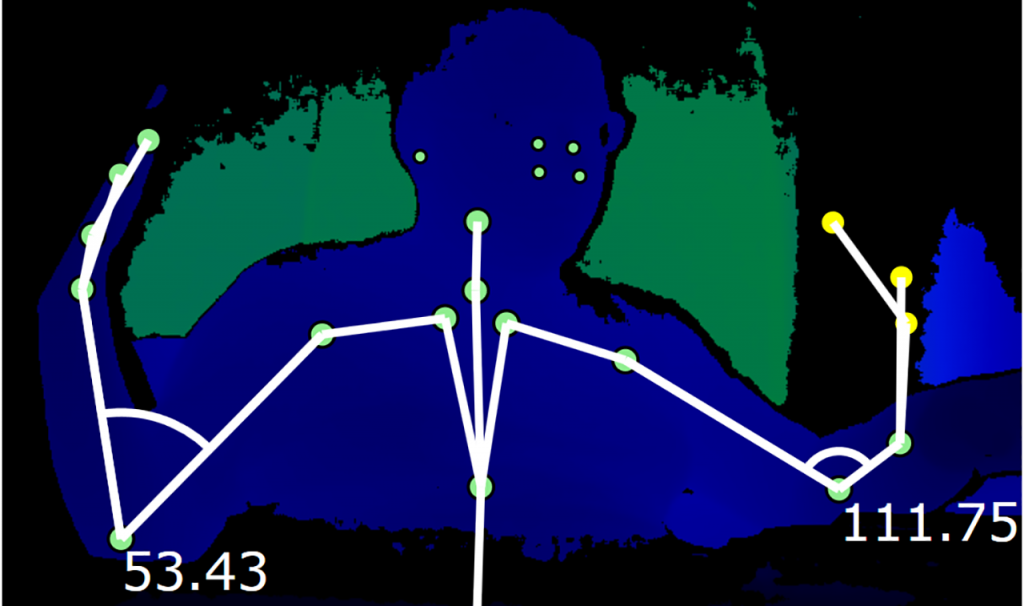

To simplify development, Microsoft supports the Azure Kinect device with two software development kits: the Sensor SDK and the Body Tracking SDK. The Sensor SDK provides low-level access to device control and configuration settings; for example, it can be used to start the device and capture both color and depth images from the cameras. The second, and for our purposes much more important, one is the Body Tracking SDK, which works together with the Azure Kinect cameras to track human bodies in 3D and extract the joint data they provide. Currently the Body Tracking SDK can track up to 32 joints per body. (Microsoft 2020.)
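The 32 joints form a fixed list in which every joint except the pelvis has a parent, so a skeleton can be drawn as one line per child-parent pair. The sketch below mirrors that list in Python. The names and order are our own transcription of the SDK's `k4abt_joint_id_t` enumeration and joint hierarchy (worth checking against the SDK headers before relying on them), and `Joint`, `PARENT` and `BONES` are hypothetical names, not part of any official binding.

```python
from enum import IntEnum

# Joint names in the order we understand k4abt_joint_id_t to use
# (index 0 = PELVIS ... index 31 = EAR_RIGHT). Assumption: verify against the SDK.
JOINT_NAMES = [
    "PELVIS", "SPINE_NAVEL", "SPINE_CHEST", "NECK",
    "CLAVICLE_LEFT", "SHOULDER_LEFT", "ELBOW_LEFT", "WRIST_LEFT",
    "HAND_LEFT", "HANDTIP_LEFT", "THUMB_LEFT",
    "CLAVICLE_RIGHT", "SHOULDER_RIGHT", "ELBOW_RIGHT", "WRIST_RIGHT",
    "HAND_RIGHT", "HANDTIP_RIGHT", "THUMB_RIGHT",
    "HIP_LEFT", "KNEE_LEFT", "ANKLE_LEFT", "FOOT_LEFT",
    "HIP_RIGHT", "KNEE_RIGHT", "ANKLE_RIGHT", "FOOT_RIGHT",
    "HEAD", "NOSE", "EYE_LEFT", "EAR_LEFT", "EYE_RIGHT", "EAR_RIGHT",
]

# Hypothetical Python mirror of the enumeration; values start at 0 like the C enum.
Joint = IntEnum("Joint", JOINT_NAMES, start=0)

# child -> parent links of the joint hierarchy; PELVIS is the root and has no parent.
_PARENT_NAMES = {
    "SPINE_NAVEL": "PELVIS", "SPINE_CHEST": "SPINE_NAVEL", "NECK": "SPINE_CHEST",
    "CLAVICLE_LEFT": "SPINE_CHEST", "SHOULDER_LEFT": "CLAVICLE_LEFT",
    "ELBOW_LEFT": "SHOULDER_LEFT", "WRIST_LEFT": "ELBOW_LEFT",
    "HAND_LEFT": "WRIST_LEFT", "HANDTIP_LEFT": "HAND_LEFT", "THUMB_LEFT": "WRIST_LEFT",
    "CLAVICLE_RIGHT": "SPINE_CHEST", "SHOULDER_RIGHT": "CLAVICLE_RIGHT",
    "ELBOW_RIGHT": "SHOULDER_RIGHT", "WRIST_RIGHT": "ELBOW_RIGHT",
    "HAND_RIGHT": "WRIST_RIGHT", "HANDTIP_RIGHT": "HAND_RIGHT", "THUMB_RIGHT": "WRIST_RIGHT",
    "HIP_LEFT": "PELVIS", "KNEE_LEFT": "HIP_LEFT", "ANKLE_LEFT": "KNEE_LEFT",
    "FOOT_LEFT": "ANKLE_LEFT",
    "HIP_RIGHT": "PELVIS", "KNEE_RIGHT": "HIP_RIGHT", "ANKLE_RIGHT": "KNEE_RIGHT",
    "FOOT_RIGHT": "ANKLE_RIGHT",
    "HEAD": "NECK", "NOSE": "HEAD", "EYE_LEFT": "HEAD", "EAR_LEFT": "HEAD",
    "EYE_RIGHT": "HEAD", "EAR_RIGHT": "HEAD",
}
PARENT = {Joint[child]: Joint[parent] for child, parent in _PARENT_NAMES.items()}

# Each (child, parent) pair is one bone, i.e. one line segment when drawing a skeleton.
BONES = list(PARENT.items())
```

Because the joints form a tree, 32 joints give 31 bones; drawing a skeleton then amounts to projecting each joint into the image and drawing a line for every pair in `BONES`.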

Developing a Computer Vision application with Azure Kinect

The main point of interest for the CitiCAP project team was the Azure Kinect's ability to detect human bodies and extract body joints. The latter is especially important because the joints can be used to evaluate body poses directly from an image or video stream. A correct prediction of the body pose can then be used to detect possible road accidents, such as falls and collisions between pedestrians and cyclists.
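As an illustration of how joints can turn into a pose estimate, the sketch below measures how far the torso (the pelvis-to-neck vector) leans away from vertical and flags a possible fall when the tilt passes a threshold. This is a hypothetical rule of thumb, not the method used in the CitiCAP Fall Estimation service. It assumes joint positions are (x, y, z) tuples in the depth camera's coordinate system, where, as we understand the SDK convention, the y axis points down, and that the camera is mounted level so that "up" is the negative y direction. The 60° threshold and the function names are arbitrary example choices.

```python
import math

Vec3 = tuple[float, float, float]

# Assumed "up" direction: the depth camera's y axis points down,
# so up is -y when the camera is mounted level.
UP: Vec3 = (0.0, -1.0, 0.0)


def torso_tilt_deg(pelvis: Vec3, neck: Vec3) -> float:
    """Angle in degrees between the pelvis->neck vector and the assumed vertical."""
    tx, ty, tz = (n - p for n, p in zip(neck, pelvis))
    length = math.sqrt(tx * tx + ty * ty + tz * tz)
    if length == 0.0:
        raise ValueError("pelvis and neck positions coincide")
    cos_a = (tx * UP[0] + ty * UP[1] + tz * UP[2]) / length
    # Clamp to guard against rounding pushing the cosine slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))


def looks_like_fall(pelvis: Vec3, neck: Vec3, threshold_deg: float = 60.0) -> bool:
    """Hypothetical heuristic: flag a pose whose torso leans more than threshold_deg."""
    return torso_tilt_deg(pelvis, neck) > threshold_deg


# An upright person (neck straight above the pelvis) versus one lying sideways.
print(looks_like_fall((0, 0, 2000), (0, -500, 2000)))
print(looks_like_fall((0, 0, 2000), (500, 0, 2000)))
```

A single-frame threshold like this is easily fooled (bending over, sitting on the ground), so a practical detector would also look at how quickly the tilt and the pelvis height change over consecutive frames.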

The result of this research was a prototype desktop application based on the Azure Kinect depth sensor and the Body Tracking SDK. The application used the Azure Kinect camera and depth sensor to detect people, separate their bodies from the image or video background, draw skeletons, return body-joint coordinates, and calculate angles and interactions between selected body parts.
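Calculating an angle between body parts comes down to vector geometry on the joint coordinates. The sketch below computes the angle at a middle joint, for example the knee from the hip, knee and ankle positions, using the dot product of the two limb vectors. It is not the prototype's code: it assumes each joint position is an (x, y, z) tuple, such as the millimetre coordinates the Body Tracking SDK reports, and `joint_angle_deg` is a hypothetical helper name.

```python
import math
from typing import Sequence


def joint_angle_deg(a: Sequence[float], b: Sequence[float], c: Sequence[float]) -> float:
    """Angle in degrees at joint b between the segments b->a and b->c."""
    ba = [ai - bi for ai, bi in zip(a, b)]
    bc = [ci - bi for ci, bi in zip(c, b)]
    dot = sum(u * v for u, v in zip(ba, bc))
    norm = math.hypot(*ba) * math.hypot(*bc)
    if norm == 0.0:
        raise ValueError("a neighbouring joint coincides with the vertex joint b")
    # Clamp the cosine to [-1, 1] to absorb floating-point rounding.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


# Knee angle for a straight leg and for a leg bent at a right angle
# (hypothetical hip, knee and ankle positions in millimetres).
hip, knee = (0, -900, 2500), (0, -500, 2500)
print(joint_angle_deg(hip, knee, (0, -100, 2500)))
print(joint_angle_deg(hip, knee, (400, -500, 2500)))
```

The angle is symmetric in `a` and `c` and independent of segment length, which makes it useful for comparing poses of people of different heights or at different distances from the camera.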

In the CitiCAP project, the application was successfully tested alongside other applications as part of the Fall Estimation service.

What’s next?

With the Azure Kinect Body Tracking prototype at hand, there are many ways to move forward. One is full integration with Azure Cloud and its Cognitive Services, which would allow the CitiCAP team to improve the Kinect AI prediction results even further. Another is actual pose and gesture estimation based on the body joints and the angles calculated with the Azure Kinect DK, two features that we can already use.

Author

Jevgeni Anttonen works as a developer and researcher in the CitiCAP project and the RDI AI Lab team at LAB University of Applied Sciences.

References

City of Lahti. 2020. CitiCAP-project. [Cited 1 October 2020]. Available at: https://www.lahti.fi/en/services/transportation-and-streets/citicap

Microsoft Azure. 2020a. Azure Kinect DK. [Cited 1 October 2020]. Available at: https://azure.microsoft.com/en-us/services/kinect-dk/

Microsoft Azure. 2020b. Cognitive Services. [Cited 1 October 2020]. Available at: https://azure.microsoft.com/en-us/services/cognitive-services/#overview

Microsoft. 2020. Azure Kinect DK documentation. [Cited 1 October 2020]. Available at: https://docs.microsoft.com/en-us/azure/kinect-dk/about-azure-kinect-dk

Pictures

Picture 1. Jevgeni Anttonen. 2020. Azure Kinect device: connected and ready to use.

Picture 2. Jevgeni Anttonen. 2020. Usage of Azure Kinect DK and Depth Sensor to track human body joints and calculate angles.